・Yesterday’s attack caused a surge in I/O load, ultimately leading to a server outage

I investigated the system logs during the attack window (16:50–17:00) and confirmed that there were no kernel errors or I/O anomalies.

What did appear repeatedly were traces of numerous SSH login attempts for invalid users such as “pentaho”, “test”, “lokesh”, and “brisklore”, as well as for root, indicating concentrated unauthorized access attempts from multiple external sources.

For example, by running the following command:

sudo journalctl --since "2025-10-28 16:50:00" --until "2025-10-28 17:00:00" -o short-iso

I observed repeated login failure messages like the ones below:

Invalid user pentaho from 156.233.228.98 port 42898

Invalid user test from 47.180.114.229 port 59494

Invalid user brisklore from 103.61.225.169 port 39876

Invalid user lokesh from 47.180.114.229 port 34718

This is a textbook case of SSH brute-force behavior (password guessing).

Since the source IPs change every minute, it’s reasonable to conclude that the attack was automated and originated from a botnet.
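To see how the attempts are spread across sources, the failure lines can be tallied per IP. The following is a minimal sketch rather than the exact procedure I used: the function name `count_invalid_by_ip` is hypothetical, and it assumes the `Invalid user <name> from <ip> port <n>` message format shown above.

```shell
#!/bin/sh
# Count "Invalid user" attempts per source IP, most active first.
# Reads log lines on stdin. Assumes the sshd message format
# "Invalid user <name> from <ip> port <n>".
count_invalid_by_ip() {
    # Locate the word "from" instead of relying on a fixed field number,
    # because the journal prefix (timestamp, host, unit) varies in width.
    awk '/Invalid user/ {
             for (i = 1; i < NF; i++)
                 if ($i == "from") { print $(i + 1); break }
         }' | sort | uniq -c | sort -rn
}

# Example usage (same time window as the journalctl command above):
#   sudo journalctl --since "2025-10-28 16:50:00" --until "2025-10-28 17:00:00" \
#       -o short-iso | count_invalid_by_ip
```

Each output line is a count followed by an address, so a long list of addresses with only a handful of attempts each would be consistent with a distributed, automated source.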

・Emergency Countermeasure: fail2ban Deployment

To mitigate the threat, I urgently deployed and configured fail2ban.

fail2ban automatically detects and blocks brute-force login attempts.

My initial configuration blocks any IP that fails 3 login attempts within 10 minutes, banning it for 24 hours.

Configuration snippet:

~# sudo nano /etc/fail2ban/jail.local

Write and save the following:

[DEFAULT]

# Ban duration (24 hours)

bantime = 24h

# Monitoring window (10 minutes)

findtime = 10m

# Allowed retries

maxretry = 3

# Ban action

banaction = ufw

[sshd]

enabled = true

port = ssh

filter = sshd

# Log file (ignored when backend = systemd; the journal is read instead)

logpath = /var/log/auth.log

backend = systemd

~# sudo systemctl restart fail2ban

~# sudo fail2ban-client status

Status

|- Number of jail: 1

`- Jail list: sshd

~# sudo fail2ban-client status sshd

Status for the jail: sshd

|- Filter

| |- Currently failed: 0

| |- Total failed: 0

| `- Journal matches: _SYSTEMD_UNIT=sshd.service + _COMM=sshd

`- Actions

|- Currently banned: 0

|- Total banned: 0

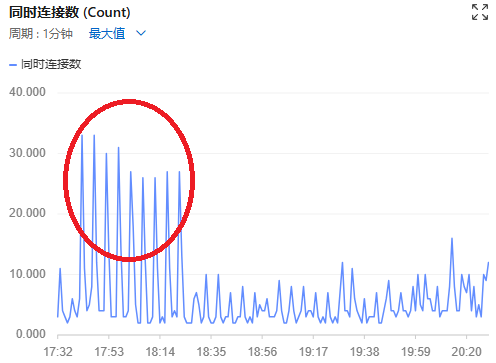

`- Banned IP list:・Today, the same attack pattern reappeared during the same time window, but thanks to fail2ban, the server has remained stable so far

fail2ban’s banned IP list:

~# sudo fail2ban-client status sshd

Status for the jail: sshd

|- Filter

| |- Currently failed: 1

| |- Total failed: 538

| `- Journal matches: _SYSTEMD_UNIT=sshd.service + _COMM=sshd

`- Actions

|- Currently banned: 24

|- Total banned: 24

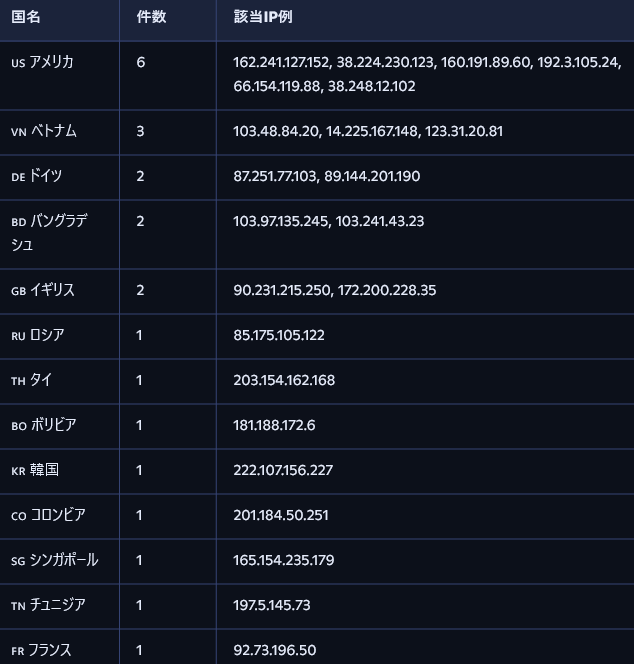

`- Banned IP list: 90.231.215.250 85.175.105.122 162.241.127.152 203.154.162.168 103.48.84.20 38.224.230.123 14.225.167.148 87.251.77.103 160.191.89.60 181.188.172.6 172.200.228.35 222.107.156.227 192.3.105.24 201.184.50.251 123.31.20.81 66.154.119.88 89.144.201.190 103.97.135.245 165.154.235.179 38.248.12.102 103.241.43.23 197.5.145.73 92.73.196.50 57.134.214.16Country-Based IP Attack Statistics (Descending Order):

As with yesterday, the majority of attacking IPs originated from the United States.

This suggests that many of the attacks are launched via VPS or cloud-hosted infrastructure.
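One way to produce a per-country tally like this is to pull the addresses out of the `fail2ban-client status sshd` output and pass each one to a GeoIP lookup. The sketch below is only an illustration and not necessarily how the statistics here were generated: `banned_ips` is a hypothetical helper, and the usage example assumes the `geoiplookup` tool (packaged as `geoip-bin` on Debian/Ubuntu) is installed.

```shell
#!/bin/sh
# Print one banned IP per line, given `fail2ban-client status <jail>`
# output on stdin. Only the "Banned IP list:" line is used.
banned_ips() {
    sed -n 's/.*Banned IP list:[[:space:]]*//p' | tr -s ' ' '\n' | sed '/^$/d'
}

# Example usage (assumes geoiplookup is installed; its output format and
# accuracy depend on the installed GeoIP database):
#   sudo fail2ban-client status sshd | banned_ips |
#       while read -r ip; do geoiplookup "$ip"; done |
#       sort | uniq -c | sort -rn
```

Keeping the extraction separate from the lookup means the same address list could also be fed to a different geolocation source if the legacy database turns out to be stale.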

・Planned Defense Enhancement

- Last night, I attempted to implement a GeoIP firewall to restrict SSH access to Japan-only connections.

- I ran: sudo apt install xtables-addons-common geoip-database -y. However, during the final stage of installation, compiling the xtables-addons-dkms module triggered high I/O load on this low-spec server, so I had to abandon the attempt for now.

- Given the proven effectiveness of fail2ban, I plan to tighten its rules further.

- During the upcoming three-day holiday, I also plan to evaluate the following security enhancements:

- Changing the default SSH port

- Enforcing public key authentication and disabling password login
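As a starting point for evaluating the two SSH changes listed above, the relevant `sshd_config` directives could be placed in a drop-in file. This is a rough sketch under several assumptions, not a tested configuration for this server: the function name `sshd_hardening_conf`, the file name `99-hardening.conf`, and port 2222 are placeholders, and it assumes the main `sshd_config` includes `/etc/ssh/sshd_config.d/*.conf` and that the service unit is named `ssh`, as on recent Debian/Ubuntu releases.

```shell
#!/bin/sh
# Emit the sshd directives under evaluation.
# Port 2222 is an arbitrary placeholder, not a recommendation.
sshd_hardening_conf() {
    cat <<'EOF'
Port 2222
PubkeyAuthentication yes
PasswordAuthentication no
EOF
}

# Example usage (keep an existing SSH session open while testing, and make
# sure a working public key is installed before disabling passwords):
#   sshd_hardening_conf | sudo tee /etc/ssh/sshd_config.d/99-hardening.conf
#   sudo sshd -t && sudo systemctl reload ssh
```

Running `sshd -t` first checks the configuration for syntax errors before the reload. If the port is changed, the firewall rules and the `port = ssh` setting in the fail2ban [sshd] jail would likely need to be updated to match.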
